When AI coding assistants become the development team’s "third variable," can we still program gracefully?

At 3 a.m. in Zhangjiang, Shanghai, Li Hang, a back-end engineer at an internet company, stared at the 13th faulty suggestion GitHub Copilot had popped up on his screen and, for the third time, slammed his coffee cup down on the desk. The seemingly omnipotent AI assistant was wrecking his Spring Cloud microservices architecture with bizarre indentation and outdated API calls.

This scene plays out constantly in the editors of millions of programmers around the world. Data from a 2024 developer summit shows that 89.6% of tech teams have deployed AI programming tools, yet more than 60% of project leads admit that these overly clever "digital colleagues" are creating new engineering dilemmas.

### I. "Specification pollution": AI sets off a wave of code chaos

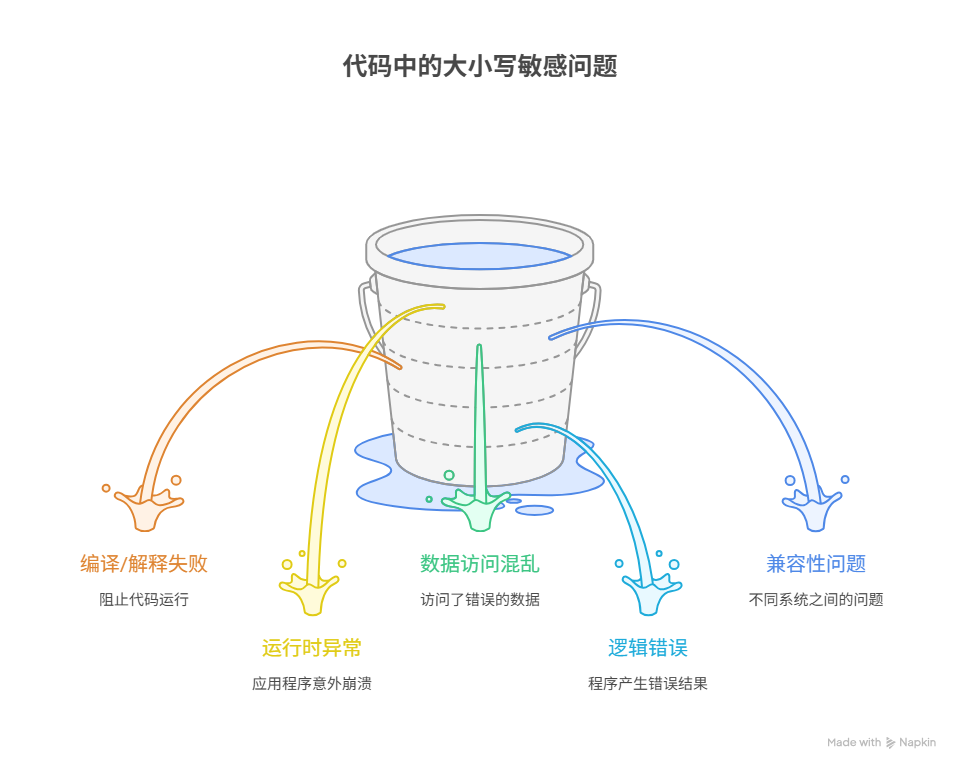

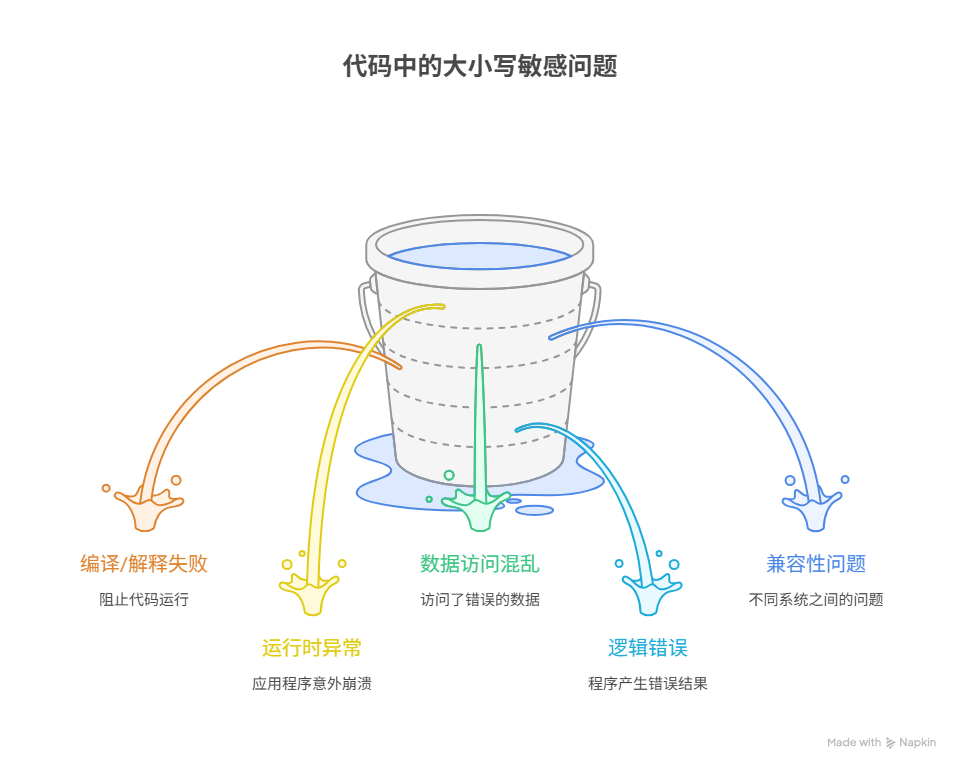

When Copilot was caught reproducing GPLv3-licensed code snippets in a pull request submitted to an open-source community, the whole industry was jolted into recognizing how thoroughly AI assistants can erode coding conventions. The technical director of a leading e-commerce company showed us a set of comparative figures: three months after the team adopted a code assistant, idiosyncratically named variables rose by 43%, use of unconventional design patterns rose by 27%, and the code review pass rate fell by 19 percentage points.

“The AI assistant is like an intern who hasn’t read *Clean Code*.” The analogy, offered by an architect at Ant Financial, is painfully accurate. At a blockchain company in Hangzhou, the technical director even found that the AI tools used by different programmers had generated three different serialization schemes for the same DTO class. This fragmentation of style at the micro level is eroding software engineering’s most precious asset: collective rationality.

### II. The "attenuation amplifier" in the knowledge transfer chain

The CTO of a Silicon Valley SaaS company showed us a striking knowledge map: after the founding team left, the engineers who took over had to contend not only with 200,000 lines of legacy code but also with the "mutated documentation" that AI had produced alongside it. What should have been a clear trail of requirements was now tangled up with machine-generated comments, auto-completed interface documentation, and "pseudo-design patterns" spawned by deep learning models.

Worse still, AI tools have accelerated knowledge decay. During a handover, a self-driving team in Beijing discovered that their former colleagues had left behind not a complete domain model document but a history of 317 prompts from three months of conversations with Copilot. This explosion of "middle-tier knowledge" drives software maintenance costs up rather than down.

### III. The law of survival in the era of human-machine contest

At a technical review meeting at a Shenzhen game company, Wang Wei, the person in charge, put forward a counterintuitive view: the AI era needs "old-school programmers" more than ever. Her team spent half a year verifying a harsh fact: only when senior engineers stand guard as gatekeepers can the share of erroneous AI suggestions that slip through be kept below 3%; when junior engineers use the assistant on their own, that figure soars to 28%.

This bears out a Google Brain researcher’s assertion that strong prompt engineering skill is, at its core, a holistic understanding of the software development life cycle. Back-end data from an AI programming tool vendor in Hangzhou shows that experienced developers revise their prompts 7.2 times as often as beginners, and that they know how to use "constraint syntax" to fence in the space the AI is allowed to roam, like putting a fine bridle on a wild horse.

### IV. When the "digital intern" meets the human goalkeeper

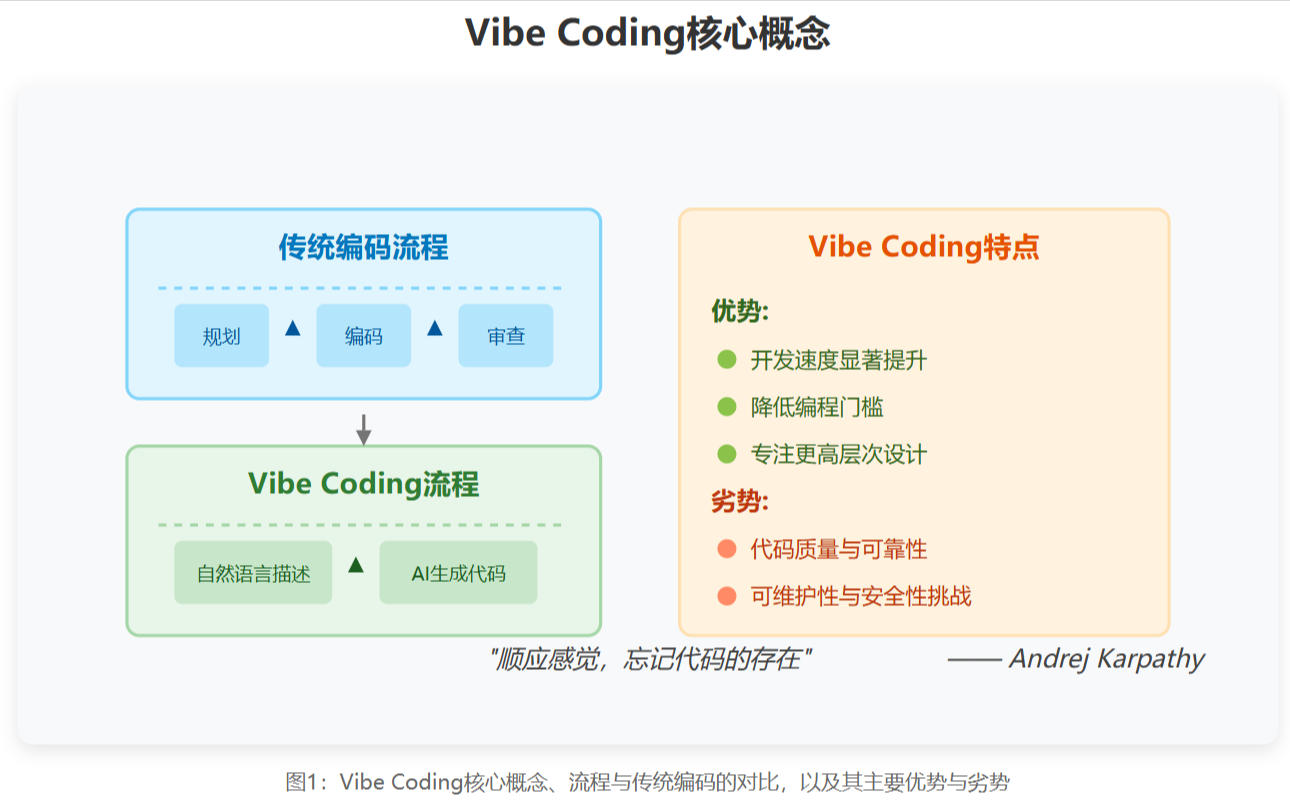

A pair programming experiment at a multinational tech company produced an intriguing division of roles: the AI assistant took on 80% of the syntax-level work, while the human developers focused on architecture-level strategic choices. One side effect of this division of labor was that young programmers’ depth of understanding of framework internals decayed at a rate of 2.3% per month.

But the other side of the coin is just as bright. Statistics from an open-source community show that well-tamed AI assistants can raise the efficiency of producing repetitive code by 240%, freeing developers to devote more energy to genuinely creative work. As Li Hang eventually realized: “Instead of letting AI write our code, we should have it help us manage technical debt. Those refactoring plans that sit on the TODO list forever now have an executor online 24/7.”

At 4 a.m. in Zhangjiang, Li Hang turned off Copilot’s autocomplete. In the suddenly quiet IDE, every character he typed by hand was a reminder that once AI has become the irreversible "third variable" of the code world, true grace may lie in always knowing exactly when to press Ctrl+S yourself.