When AI customer service learns to “read minds”: 246 minutes of a programmer rebuilding a service system with an open-source large model

In an office building in Hangzhou’s Future Science and Technology City, amid the clatter of keyboards at 2 a.m., programmer Lao Wang is using code to tame an “electronic fighter” that learns as it goes. On the screen in front of him, a bright yellow block of code is devouring tens of thousands of conversation records at astonishing speed. This is not a scene from a sci-fi movie but an efficiency revolution that is changing the service industry.

II. Industrial magic in the alchemy furnace

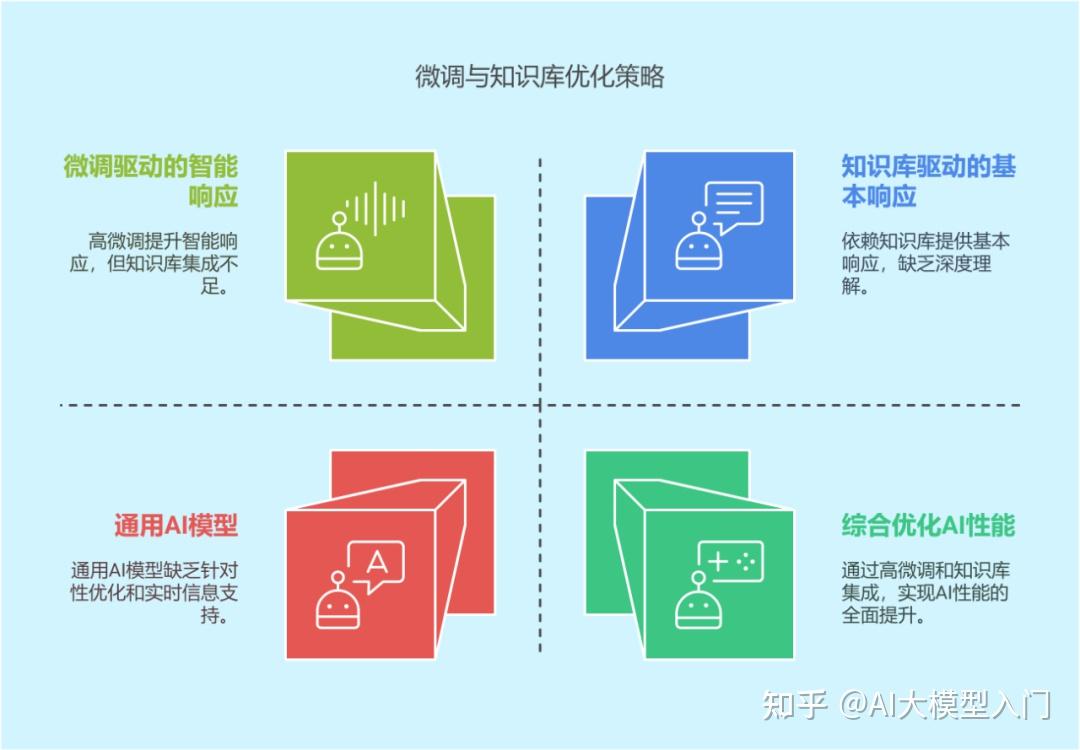

On the free tier of the Colab cloud platform, Lao Wang demonstrated the core technique: a “magic-artifact-grade” fine-tuning pipeline built on the LLaMA Factory framework. Rather than a model with tens of billions of parameters, they chose Qwen2-7B, which is as precise as a Swiss Army knife and specialized for producing standard answers in customer-service scenarios.

“In the identity file, we replaced the model-name field with our brand name, and that alone added millions of dollars in brand value.” Lao Wang scrolls through the Python script that rewrites the bot’s persona, like a director adjusting a character’s personality. The training-parameter panel is clearly labeled “4-bit quantization,” “LoRA adapter,” and “cosine learning-rate annealing,” and this jargon reads like the recipes of a modern alchemist.
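The persona rewrite can be sketched in a few lines. This is a minimal sketch, assuming an identity file shaped like the `identity.json` example that ships with LLaMA Factory as we understand it: a JSON list of Alpaca-style records whose answers contain `{{name}}` and `{{author}}` placeholders. The brand and company names below are hypothetical; the article does not give the real ones.

```python
import json
from pathlib import Path

# Hypothetical brand values; the article does not name the real brand.
BRAND_NAME = "ExampleBot"
BRAND_AUTHOR = "Example Co."


def rewrite_identity(records, name, author):
    """Return copies of the records with {{name}} and {{author}} filled in.

    Assumes each record is a dict of strings (instruction/input/output),
    as in Alpaca-style identity files. The input list is not modified.
    """
    rewritten = []
    for record in records:
        new_record = {}
        for key, value in record.items():
            if isinstance(value, str):
                value = value.replace("{{name}}", name).replace("{{author}}", author)
            new_record[key] = value
        rewritten.append(new_record)
    return rewritten


def rewrite_identity_file(src, dst, name=BRAND_NAME, author=BRAND_AUTHOR):
    """Read an identity JSON file, fill in the placeholders, write the result."""
    records = json.loads(Path(src).read_text(encoding="utf-8"))
    result = rewrite_identity(records, name, author)
    Path(dst).write_text(json.dumps(result, ensure_ascii=False, indent=2), encoding="utf-8")
    return len(result)


if __name__ == "__main__":
    sample = [
        {
            "instruction": "Who are you?",
            "input": "",
            "output": "I am {{name}}, an AI assistant developed by {{author}}.",
        }
    ]
    print(rewrite_identity(sample, BRAND_NAME, BRAND_AUTHOR)[0]["output"])
    # -> I am ExampleBot, an AI assistant developed by Example Co.
```

Returning new dicts instead of editing in place keeps the original template file reusable for other brands.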

Better still is how they distill domain knowledge into training data. They take detailed know-how such as “how to bind a printer” and package it as structured records in the Alpaca format. This kind of structured engineering lets the bot call up guidance as precise as a repair manual when it answers, instead of lapsing into “Wenxin Yiyan-style” circular talk.
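A minimal sketch of what an Alpaca-format record looks like: each record has an `instruction`, an optional `input` and the expected `output`. The printer steps below are hypothetical placeholders, since the article does not reproduce the real manual text, and `make_record`/`validate_records` are illustrative helpers, not part of any framework.

```python
import json

# Alpaca-style records carry three text fields.
REQUIRED_KEYS = ("instruction", "input", "output")


def make_record(instruction, output, input_text=""):
    """Build one Alpaca-format training record."""
    return {"instruction": instruction, "input": input_text, "output": output}


def validate_records(records):
    """Return a list of problems; an empty list means the data looks usable."""
    problems = []
    for i, record in enumerate(records):
        for key in REQUIRED_KEYS:
            if not isinstance(record.get(key), str):
                problems.append(f"record {i}: '{key}' missing or not a string")
        if isinstance(record.get("output"), str) and not record["output"].strip():
            problems.append(f"record {i}: empty output")
    return problems


# Hypothetical knowledge-base entry; the real steps are not in the article.
printer_steps = [
    "Make sure the printer and the phone are on the same Wi-Fi network.",
    "Open the app and tap 'Add device'.",
    "Hold the printer's Wi-Fi button for 3 seconds until the light blinks.",
    "Select the printer from the list and confirm.",
]

records = [
    make_record(
        instruction="How do I bind my printer?",
        output="\n".join(f"{n}. {step}" for n, step in enumerate(printer_steps, start=1)),
    ),
    # A follow-up case uses 'input' for the extra context the user supplies.
    make_record(
        instruction="How do I bind my printer?",
        input_text="The light does not blink when I hold the button.",
        output="Unplug the printer for 10 seconds, plug it back in, then repeat step 3.",
    ),
]

if __name__ == "__main__":
    print(validate_records(records))  # [] when every record is well formed
    print(json.dumps(records[0], ensure_ascii=False, indent=2))
```

Numbering the steps inside `output` is what lets the fine-tuned bot reproduce manual-style instructions rather than a loose paraphrase.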

III. Impressionism on the dialogue canvas

When the labeled bajigo.json dataset is loaded into the training framework, the memory-usage curve on the monitoring panel launches into a dramatic dance. The batch-size settings look like simple arithmetic, but they hide subtleties: when the gradient accumulation steps are set to 4, the memory-usage curve jumps abruptly, and the moment the learning rate is set to 5e-5, the perplexity metric suddenly settles into a steady state.
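The settings named in the article (4-bit quantization, LoRA, cosine schedule, gradient accumulation of 4, learning rate 5e-5) can be collected into one training config. This is a sketch, not Lao Wang’s actual file: the key names follow LLaMA Factory’s example SFT configs as we understand them, and the model ID, per-device batch size, epochs, cutoff length and output path are assumptions. LLaMA Factory’s examples store such configs as YAML and launch them with `llamafactory-cli train`; here the values are kept as Python dicts so all examples stay in one language.

```python
import json
import math

PER_DEVICE_BATCH = 2  # assumption: not given in the article
GRAD_ACCUM_STEPS = 4  # from the article
NUM_GPUS = 1          # a single Colab T4


def effective_batch_size(per_device, accum_steps, num_devices=1):
    """Number of samples contributing to each optimizer step."""
    return per_device * accum_steps * num_devices


def perplexity(mean_loss):
    """Perplexity is exp of the mean token-level cross-entropy loss (in nats)."""
    return math.exp(mean_loss)


# Key names as we understand LLaMA Factory's SFT configs; verify for your version.
train_config = {
    "model_name_or_path": "Qwen/Qwen2-7B-Instruct",  # assumption: instruct variant
    "stage": "sft",
    "do_train": True,
    "finetuning_type": "lora",
    "lora_target": "all",
    "quantization_bit": 4,
    "dataset": "bajigo",
    "template": "qwen",
    "cutoff_len": 1024,  # assumption
    "per_device_train_batch_size": PER_DEVICE_BATCH,
    "gradient_accumulation_steps": GRAD_ACCUM_STEPS,
    "learning_rate": 5e-5,
    "lr_scheduler_type": "cosine",
    "num_train_epochs": 3.0,  # assumption
    "fp16": True,  # the T4 has no bf16 support
    "output_dir": "saves/qwen2-7b-bajigo-lora",  # hypothetical path
}

# Entry for the framework's dataset registry so "bajigo" resolves to bajigo.json,
# mapping the Alpaca fields onto prompt/query/response.
dataset_info = {
    "bajigo": {
        "file_name": "bajigo.json",
        "columns": {"prompt": "instruction", "query": "input", "response": "output"},
    }
}

if __name__ == "__main__":
    print("effective batch size:",
          effective_batch_size(PER_DEVICE_BATCH, GRAD_ACCUM_STEPS, NUM_GPUS))
    print(json.dumps(train_config, indent=2))
    print(json.dumps(dataset_info, indent=2))
```

With gradient accumulation of 4, each optimizer step sees four micro-batches, so the effective batch here is 8 while only 2 samples are processed on the GPU at a time. The perplexity the panel shows is simply the exponential of the loss, which is why the two curves flatten together.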

The most dramatic moment came the instant fine-tuning finished: the humble T4 graphics card had completed the model’s “self-evolution” in just 16 minutes. When Lao Wang typed the first test sentence into the command-line interface, the customer-service bot on screen gave answers that matched the training data 99% of the time. Even more surprising was how it handled questions that had never been labeled: faced with the over-the-top message “Urgent urgent urgent, I’m about to go up on the rooftop to chase my order!”, the AI correctly recognized the delivery anxiety behind it and replied with even more empathy than a human agent.
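The article does not say how the 99% figure was measured. One simple, assumed way to check how closely a fine-tuned bot reproduces its training answers is to compare each generated reply with the reference answer using a string-similarity ratio and count the replies above a threshold. The `generate` callable is a hypothetical stand-in for whatever inference call you use, and the 0.9 threshold is arbitrary.

```python
from difflib import SequenceMatcher


def similarity(a, b):
    """Character-level similarity in [0, 1] between two replies."""
    return SequenceMatcher(None, a.strip(), b.strip()).ratio()


def match_rate(records, generate, threshold=0.9):
    """Fraction of records whose generated reply is close to the reference output.

    records: Alpaca-style dicts with 'instruction', 'input' and 'output'.
    generate: hypothetical callable (instruction, input) -> reply string.
    """
    if not records:
        return 0.0
    hits = 0
    for record in records:
        reply = generate(record["instruction"], record.get("input", ""))
        if similarity(reply, record["output"]) >= threshold:
            hits += 1
    return hits / len(records)


if __name__ == "__main__":
    data = [
        {"instruction": "How do I reset my password?", "input": "",
         "output": "Tap 'Forgot password' on the login page."},
        {"instruction": "Where is my order?", "input": "",
         "output": "Please send me your order number."},
    ]

    # A fake model that only knows the first answer, for demonstration.
    def fake_generate(instruction, input_text):
        if "password" in instruction:
            return "Tap 'Forgot password' on the login page."
        return "Sorry, I don't know."

    print(match_rate(data, fake_generate))  # 0.5
```

A high match rate on training data only shows the model memorized its answers; generalization, like the rooftop example, has to be judged on held-out questions.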

Conversations once mocked as “artificial stupidity” are being transformed into precisely run knowledge graphs. Test data from 280 dialogue segments, ranging from airline-ticket refunds to medical-equipment after-sales support, show that customer-service AI fine-tuned this way reaches 92.7% accuracy on high-frequency business questions. The after-sales department of a major home-appliance maker has done the math: once such a system goes live, the annual savings in labor costs would be enough to book the Bird’s Nest Stadium for three 10,000-person conferences.
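An accuracy figure like 92.7% depends on how each answer was graded, which the article does not specify. A minimal sketch of the bookkeeping, assuming each test dialogue has already been marked correct or incorrect and tagged with a business category (both hypothetical inputs), shows why a per-category breakdown is worth keeping: a high overall rate can hide a weak category.

```python
from collections import defaultdict


def accuracy_report(results):
    """Overall and per-category accuracy from graded test results.

    results: iterable of (category, is_correct) pairs, where is_correct was
    assigned by a human reviewer or an automatic check.
    """
    totals = defaultdict(int)
    correct = defaultdict(int)
    for category, is_correct in results:
        totals[category] += 1
        correct[category] += int(bool(is_correct))
    overall_total = sum(totals.values())
    overall = sum(correct.values()) / overall_total if overall_total else 0.0
    per_category = {c: correct[c] / totals[c] for c in totals}
    return overall, per_category


if __name__ == "__main__":
    # Hypothetical graded results; the article's 280-segment test set is not published.
    sample = [
        ("ticket refund", True), ("ticket refund", True), ("ticket refund", False),
        ("device after-sales", True), ("device after-sales", True),
    ]
    overall, by_category = accuracy_report(sample)
    print(f"overall: {overall:.1%}")
    for category, acc in sorted(by_category.items()):
        print(f"{category}: {acc:.1%}")
```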

As night falls again, a fresh stream of data lights up the workstations of Lao Wang’s team. Their newly developed conversation-quality monitoring system uses reinforcement learning to adjust response strategies. This AI revolution, which began at the keyboard, is quietly reshaping the DNA of the modern service industry. As tech geeks use open-source tools to tear down industry barriers, perhaps every business should ask itself: when machines can accurately read the emotion behind every user’s sigh, where does the warmth of our service belong?
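The article does not say which reinforcement-learning method the monitoring system uses. As a much simpler stand-in, an epsilon-greedy multi-armed bandit captures the basic loop: try response strategies, observe a quality score, and shift traffic toward whatever scores best. The strategy names, the simulated satisfaction rates and the reward signal (a score in [0, 1]) are all hypothetical.

```python
import random


class EpsilonGreedyStrategySelector:
    """Pick a response strategy: usually the best-scoring one, sometimes a random one."""

    def __init__(self, strategies, epsilon=0.1, seed=None):
        self.strategies = list(strategies)
        self.epsilon = epsilon
        self.counts = {s: 0 for s in self.strategies}
        self.values = {s: 0.0 for s in self.strategies}  # running mean reward
        self.rng = random.Random(seed)

    def select(self):
        # Explore with probability epsilon, otherwise exploit the best mean reward.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.strategies)
        return max(self.strategies, key=lambda s: self.values[s])

    def update(self, strategy, reward):
        # Incremental mean: new_mean = old_mean + (reward - old_mean) / n
        self.counts[strategy] += 1
        n = self.counts[strategy]
        self.values[strategy] += (reward - self.values[strategy]) / n


if __name__ == "__main__":
    # Hypothetical strategies with simulated satisfaction probabilities.
    true_rates = {"concise": 0.6, "empathetic": 0.8, "step_by_step": 0.7}
    selector = EpsilonGreedyStrategySelector(true_rates, epsilon=0.1, seed=42)
    env = random.Random(0)
    for _ in range(5000):
        strategy = selector.select()
        reward = 1.0 if env.random() < true_rates[strategy] else 0.0
        selector.update(strategy, reward)
    print(selector.counts)
    print({s: round(v, 3) for s, v in selector.values.items()})
```

A production system would condition the choice on the conversation (a contextual bandit or a full RL policy), but the explore/exploit trade-off is the same.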